Deploy Magento 2 to Kubernetes

Magento 2 does not currently offer a fully cloud-native deployment approach, and documentation on the topic is scarce. Below, we'll see how to deploy immutable Docker images of Magento 2 to Kubernetes, making it possible to build once (i.e. a tag) and deploy many times (e.g. staging, preproduction, production).

This post is not a detailed how-to guide, but rather a compilation of solutions to known difficulties you'll face trying to deploy Magento to Kubernetes.

At the end of this post, you'll also find a list of recommendations related to security, observability and availability.

Building the Docker image

Once the code is copied and dependencies are installed via Composer, Magento requires two major commands to be run during the build. Neither works out of the box in this context, because the build has no access to the database or to external services:

- bin/magento setup:di:compile

- bin/magento setup:static-content:deploy

See our clickandmortar/magento-kubernetes GitHub repository for a complete Dockerfile example.

setup:di:compile

When run with an existing app/etc/env.php, this command will try to connect to the database and/or the cache. To get around this, move the file to a temporary location:

    RUN mv app/etc/env.php app/etc/env.php.orig \
        && bin/magento setup:di:compile

Keep your app/etc/config.php in place: Magento needs it to know which modules are enabled.

setup:static-content:deploy

This command needs to know the website structure to work properly, so you'll need to dump your database configuration to app/etc/config.php before building the image:

bin/magento app:config:dump

Once static content is deployed, this file, which now contains the whole Magento configuration, should be reverted to its simpler version containing only the modules list. Otherwise you won't be able to change any setting on your deployed environment (except through environment variables, which is the recommended approach anyway).

I'd recommend keeping both files:

- app/etc/config.php containing the modules list

- app/etc/config.docker.php containing the whole config, only useful at build time

Then within your Dockerfile:

    RUN mv app/etc/config.php app/etc/config.php.orig \
        && mv app/etc/config.docker.php app/etc/config.php \
        && bin/magento setup:static-content:deploy \
            --max-execution-time=3600 \
            -t my/theme -t Magento/backend \
            ja_JP de_DE es_ES ... \
        && mv app/etc/config.php.orig app/etc/config.php

For both commands above, you may need to increase the memory limit:

RUN php -d memory_limit=1024M bin/magento setup:...

Dynamic configuration

Magento allows overriding any system configuration value using environment variables. Variable names follow the patterns below, with the slash characters of the config path replaced by double underscores:

- CONFIG__DEFAULT__DEV__JS__MERGE_FILES: sets value of dev/js/merge_files on default scope

- CONFIG__STORES__MYSTORECODE__WEB__UNSECURE__BASE_URL: sets value of web/unsecure/base_url for store with code mystorecode

- CONFIG__WEBSITES__MYWEBSITECODE__WEB__SECURE__BASE_URL: sets value of web/secure/base_url for website with code mywebsitecode

Note that these environment values take precedence over values in config.php and the database.

⚠️ Note that environment variables holding sensitive configuration (e.g. payment gateway credentials) must contain encrypted values.
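As a minimal sketch of how these variables could be supplied in Kubernetes, the ConfigMap below holds the three example variables from the list above. The name magento-cm matches the ConfigMap referenced by the Helm hook Job later in this post; the store code, website code and URLs are placeholder assumptions, not values from a real project.

```yaml
# Hypothetical ConfigMap: each key becomes an environment variable
# when the ConfigMap is referenced through envFrom in a Pod spec.
apiVersion: v1
kind: ConfigMap
metadata:
  name: magento-cm
data:
  # dev/js/merge_files on the default scope (ConfigMap values must be strings)
  CONFIG__DEFAULT__DEV__JS__MERGE_FILES: "1"
  # web/unsecure/base_url for the store with code "mystorecode" (placeholder URL)
  CONFIG__STORES__MYSTORECODE__WEB__UNSECURE__BASE_URL: "http://staging.example.com/"
  # web/secure/base_url for the website with code "mywebsitecode" (placeholder URL)
  CONFIG__WEBSITES__MYWEBSITECODE__WEB__SECURE__BASE_URL: "https://staging.example.com/"
```

Each environment (staging, preproduction, production) would then get its own ConfigMap values while running the same image tag.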

However, Magento does not offer a similar mechanism for the values of env.php. An easy way to define database connection info and similar settings using environment variables is to take advantage of PHP's getenv() function:

    <?php
    ...
    'db' => [
        'table_prefix' => '',
        'connection' => [
            'default' => [
                // Connection settings are read from environment variables at runtime
                'host' => getenv('MAGENTO_DATABASE_HOST'),
                'dbname' => getenv('MAGENTO_DATABASE_NAME'),
                'username' => getenv('MAGENTO_DATABASE_USERNAME'),
                'password' => getenv('MAGENTO_DATABASE_PASSWORD'),
                'model' => 'mysql4',
                'engine' => 'innodb',
                'initStatements' => 'SET NAMES utf8;',
                'active' => '1'
            ]
        ]
    ],
    ...
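The variables read by getenv() in the excerpt above could, for instance, come from a Kubernetes Secret. The sketch below reuses the magento-secret name referenced by the Helm hook Job later in this post; all values are placeholders, and in a real chart they would be injected from a secret manager or from Helm values rather than committed in plain text.

```yaml
# Hypothetical Secret: keys map one-to-one to the getenv() calls in env.php.
apiVersion: v1
kind: Secret
metadata:
  name: magento-secret
type: Opaque
# stringData accepts plain strings; Kubernetes stores them base64-encoded.
stringData:
  MAGENTO_DATABASE_HOST: "mysql.example.internal"   # placeholder host
  MAGENTO_DATABASE_NAME: "magento"                  # placeholder database name
  MAGENTO_DATABASE_USERNAME: "magento"              # placeholder user
  MAGENTO_DATABASE_PASSWORD: "change-me"            # placeholder password
```

Keep in mind that getenv() returns false when a variable is not set, so a missing key in the Secret would only surface as a connection error at runtime.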

Deploying to Kubernetes

Architecture

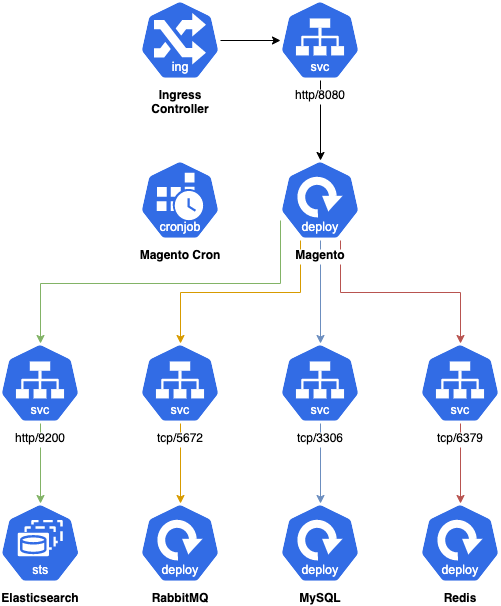

The schema below is an overview of the (simplified) target architecture of Magento running in Kubernetes:

Magento Kubernetes simplified architecture.

Usage of cloud managed services is recommended whenever possible.

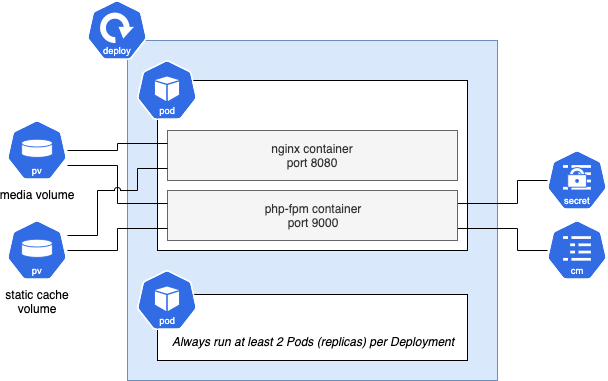

And below is a detailed view of the Magento Deployment part:

As you can see, Magento requires volumes to be shared between running Pods:

- media: contents of the pub/media directory, which holds product images for instance

- static cache: contents of the pub/static/_cache directory, where the minified/compressed CSS/JS assets will be created

To achieve this, you'll need a StorageClass that supports mounting a PersistentVolume with the ReadWriteMany (RWX) access mode. You may use the Kubernetes NFS provisioner or a managed service offering an RWX StorageClass (Google Cloud Filestore, Alibaba Cloud NAS, etc.).
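A claim for the media volume could look like the following sketch. The claim name, the storage class name (nfs-client) and the requested size are assumptions for illustration; use whatever RWX-capable StorageClass your cluster actually provides.

```yaml
# Hypothetical PersistentVolumeClaim for the shared pub/media directory.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: magento-media
spec:
  accessModes:
    - ReadWriteMany          # required so that several Pods can mount the volume read-write
  storageClassName: nfs-client   # assumption: an RWX-capable StorageClass with this name exists
  resources:
    requests:
      storage: 20Gi          # placeholder size
```

A second claim of the same shape would cover pub/static/_cache, and both would be mounted in every Magento Pod.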

Deploying using Helm

The most practical way to deploy Magento to Kubernetes is to use Helm. It offers advanced templating features, release management and hooks.

When deploying a new release, Magento requires the setup:upgrade command to be executed before the application is actually deployed.

Helm chart hooks are quite handy for this purpose, as shown by the annotations in this example:

    # upgrade-job.yaml
    apiVersion: batch/v1
    kind: Job
    metadata:
      name: "{{ include "magento.fullname" . }}-pre-upgrade-job"
      labels:
        {{- include "magento.labels" . | nindent 4 }}
      annotations:
        "helm.sh/hook": pre-upgrade
        "helm.sh/hook-weight": "1"
        "helm.sh/hook-delete-policy": before-hook-creation
    spec:
      template:
        metadata:
          labels:
            {{- include "magento.labels" . | nindent 8 }}
        spec:
          restartPolicy: Never
          containers:
            - name: php
              image: {{ .Values.image.name }}:{{ .Values.image.tag }}
              command: ["bin/magento", "setup:upgrade", "--keep-generated"]
              envFrom:
                - configMapRef:
                    name: magento-cm
                - secretRef:
                    name: magento-secret

You may also need to define a post-install,post-upgrade hook to clear the cache once the release is fully deployed.
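Such a cache-clearing hook might be sketched as follows, mirroring the structure of the upgrade Job. The file name and Job name suffix are hypothetical, and the template helpers (magento.fullname, magento.labels) are assumed to be defined in the chart as in the example above.

```yaml
# cache-flush-job.yaml (hypothetical file name)
apiVersion: batch/v1
kind: Job
metadata:
  name: "{{ include "magento.fullname" . }}-cache-flush-job"
  labels:
    {{- include "magento.labels" . | nindent 4 }}
  annotations:
    # Run after the first install and after every upgrade
    "helm.sh/hook": post-install,post-upgrade
    "helm.sh/hook-weight": "1"
    # Remove the previous Job before creating a new one
    "helm.sh/hook-delete-policy": before-hook-creation
spec:
  template:
    spec:
      restartPolicy: Never
      containers:
        - name: php
          image: {{ .Values.image.name }}:{{ .Values.image.tag }}
          command: ["bin/magento", "cache:flush"]
          envFrom:
            - configMapRef:
                name: magento-cm
            - secretRef:
                name: magento-secret
```

Note that Helm only waits for the release's resources to be ready before running post hooks when the --wait flag is passed, so you will probably want to use it with this kind of hook.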

The rest of the Helm chart required to deploy Magento is quite straightforward; a good starting point is the helm create command.

Still a lot to do

The deployment architecture described above is quite basic, and requires additional work on the following topics:

Security

- Containers should run as non-privileged users (www-data for php-fpm, nginx for nginx, etc.) and with minimal capabilities (see Gatekeeper)

- Containers should, when possible, run with their root filesystem in read-only mode (more on this topic coming in a future post)

- The latest compatible version of PHP (and of other components such as MySQL, RabbitMQ, etc.) should be used (you may automate this using Dependabot for Docker)

- Isolate your workloads using Network Policies

- Use a Service Account with minimal permissions

- Appropriate security headers should be sent by nginx or your Ingress Controller (see https://securityheaders.com/)

- Use a WAF if possible, for instance ModSecurity, which is bundled with the nginx ingress controller

- Set up rate limiting rules, using for instance nginx ingress controller rules

- Keep your Docker images as thin as possible: do not install unnecessary packages/libraries and use alpine base images when possible

- Use a custom admin URL and restrict access to it using HTTP authentication or IP restriction, in addition to Two-Factor Authentication (2FA)

- Set up an automated security check of your Magento instance using Magento's Security Scan tool

- Upgrade Magento as soon as possible when security fixes are released; this has recently been made a lot easier by security patch versions (e.g. 2.3.x-p1)

- Automate security checks using your CI (see https://docs.gitlab.com/ee/user/application_security/sast/)
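The first two recommendations of the list above (non-privileged user, read-only root filesystem, minimal capabilities) could translate into a securityContext along these lines. This is only a sketch: the Pod name and image are placeholders, and UID/GID 82 assumes an Alpine-based PHP image where www-data has that ID (on Debian-based images it is usually 33).

```yaml
# Hypothetical Pod illustrating a hardened securityContext for php-fpm.
apiVersion: v1
kind: Pod
metadata:
  name: magento-php-example
spec:
  securityContext:
    runAsNonRoot: true
    runAsUser: 82            # assumption: www-data on an Alpine-based image
    runAsGroup: 82
  containers:
    - name: php
      image: registry.example.com/magento:1.0.0   # placeholder image
      securityContext:
        allowPrivilegeEscalation: false
        readOnlyRootFilesystem: true
        capabilities:
          drop: ["ALL"]
      volumeMounts:
        # Writable scratch space, since the root filesystem is read-only
        - name: tmp
          mountPath: /tmp
  volumes:
    - name: tmp
      emptyDir: {}
```

With a read-only root filesystem, every directory Magento writes to (var, generated files, sessions, etc.) needs its own writable volume, which is why this setting usually takes some trial and error.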

Monitoring & observability

- Expose Prometheus metrics for all your applications (see https://github.com/hipages/php-fpm_exporter for php-fpm)

- Monitor and analyse your Prometheus metrics using Grafana and Alertmanager

- Use Monolog features to format your logs as JSON and add necessary metadata for further analysis

- Write your logs to standard output / error instead of files under var/log, and forward them to a centralized analysis tool (Elasticsearch + Kibana), using Fluentd for instance

- Use an OpenTracing implementation (Jaeger, Zipkin), especially when working in distributed / headless mode

Availability & fault tolerance

- Long-running processes, such as queue consumers, must be able to stop gracefully when they receive a SIGTERM signal from the container runtime; this can be achieved by catching signals (see https://github.com/magento/community-features/issues/280). For nginx, see https://pracucci.com/graceful-shutdown-of-kubernetes-pods.html

- Define Pod anti-affinity rules to avoid having all your Pods deployed on the same Node

- Always use a Pod Disruption Budget to ensure keeping enough Pods running at any time

- Define readiness and liveness probes on your workloads:

  - Pod not ready: Kubernetes does not send traffic to the Pod (it is removed from the Service's Endpoints)

  - Pod not alive: Kubernetes restarts the failing container

- Fine-tune your rollingUpdate strategy to ensure keeping enough Pods during deployment of a new release

- When possible, deploy your Kubernetes cluster across multiple zones within a region (beware that volumes are generally bound to a single zone once created)

- Use a blue-green or canary pattern when deploying, with a service mesh and/or Flagger or any other tool

- Use a Horizontal Pod Autoscaler to auto-scale your workloads, and auto-scale your cluster as well (the latter requires a cluster autoscaler)

- Use managed services offered by your cloud provider whenever possible, especially for:

  - MySQL: Google Cloud SQL, AWS RDS/Aurora, Alibaba Cloud ApsaraDB RDS, etc.

  - Elasticsearch: Elastic Cloud, AWS Elasticsearch Service, Alibaba Cloud Elasticsearch, etc.

  - Redis: Google Memorystore, AWS ElastiCache, Alibaba Cloud ApsaraDB Redis, etc.

- Back up your data frequently (using Velero for instance)
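Several of the availability recommendations above (probes, rolling update strategy, Pod Disruption Budget, Horizontal Pod Autoscaler) can be sketched together as plain manifests. Everything here is an assumption for illustration: the names, the app: magento label, the image, the php-fpm port 9000, the resource requests and the thresholds would all need to be adapted to your workload.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: magento
spec:
  # No "replicas" field: the HorizontalPodAutoscaler below manages it
  selector:
    matchLabels:
      app: magento
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 0      # never go below the current capacity during a rollout
      maxSurge: 1
  template:
    metadata:
      labels:
        app: magento
    spec:
      containers:
        - name: php
          image: registry.example.com/magento:1.0.0   # placeholder image
          ports:
            - containerPort: 9000
          resources:
            requests:
              cpu: 500m      # a CPU request is needed for utilization-based autoscaling
              memory: 1Gi
          readinessProbe:    # failing: the Pod stops receiving traffic
            tcpSocket:
              port: 9000
            periodSeconds: 10
          livenessProbe:     # failing: the container is restarted
            tcpSocket:
              port: 9000
            initialDelaySeconds: 30
            periodSeconds: 20
---
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: magento
spec:
  minAvailable: 1            # keep at least one Pod during voluntary disruptions
  selector:
    matchLabels:
      app: magento
---
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: magento
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: magento
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70
```

A TCP probe only checks that php-fpm accepts connections; an HTTP probe going through nginx to a lightweight health endpoint would give a more meaningful signal.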